In iOS 18.2, the feature will likely be entirely optional

According to a new report, the updated feature will not only blur nude photos and videos in messaging chats but also give kids the power to flag those messages directly to Apple. Right now, Apple’s safety features on iPhones automatically detect nude photos and videos that kids might send or receive through iMessage, AirDrop, FaceTime, and Photos. This detection happens on the device itself to keep things private.

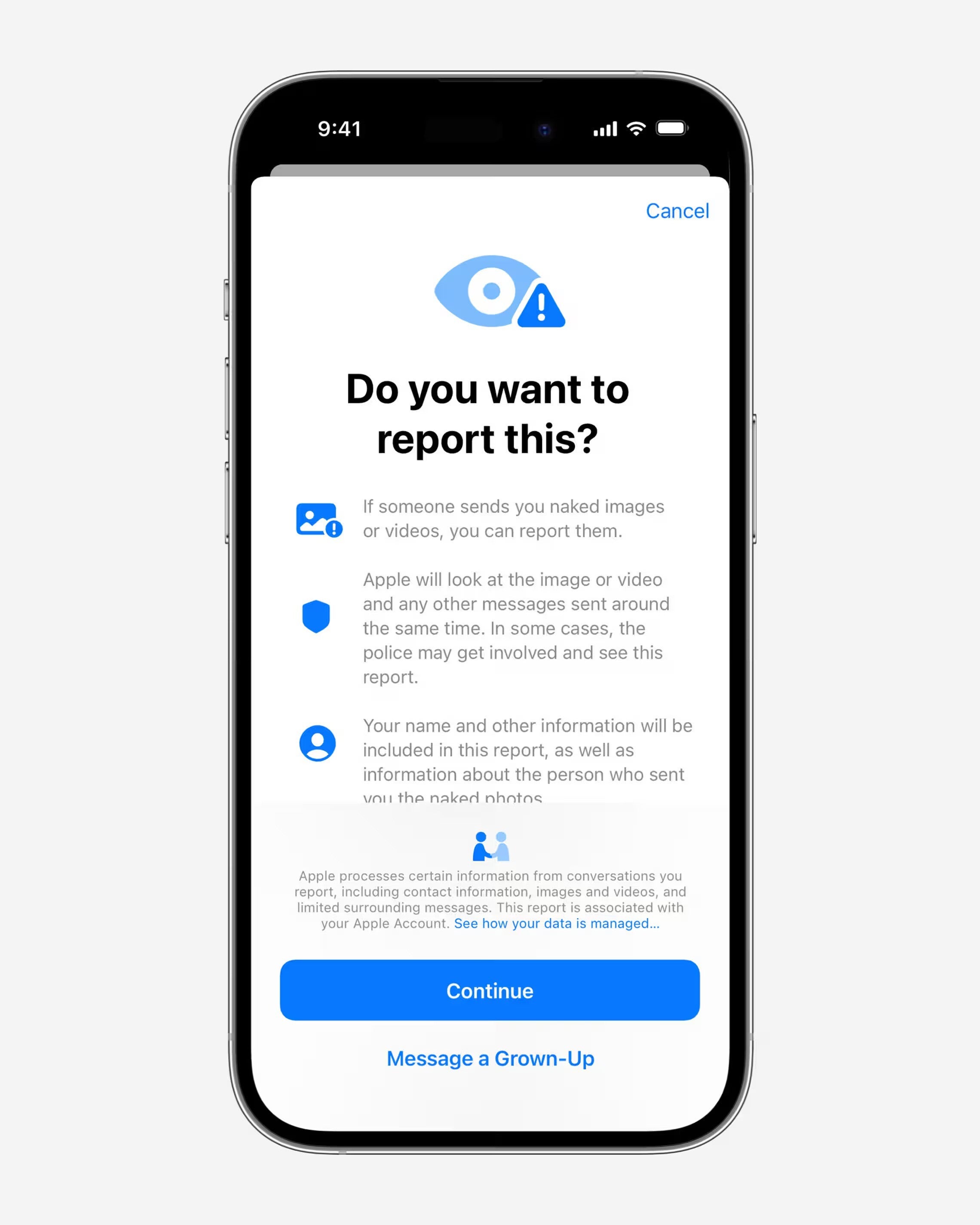

With the current settings, if nudity is detected, kids see a couple of intervention popups explaining how to reach out to authorities and advising them to tell a parent or guardian. After the update, when the system identifies nudity, a new popup will appear, allowing users to report the photos and videos directly to Apple. From there, Apple could pass that information on to the authorities.

A new popup will appear, allowing you to report the photos and videos directly to Apple. | Image credit – Apple

The device will generate a report that includes the photos or videos in question, along with the messages sent right before and after the nudity was detected. It will also gather contact information from both accounts, and users will have the option to fill out a form describing what happened.

Once Apple gets the report, it will review the content. Based on that assessment, the company can take action on the account, which could involve disabling the user’s ability to send messages via iMessage and reporting the incident to law enforcement.

This feature is now being rolled out in Australia as part of the iOS 18.2 beta, with plans to go global later. It is also expected to be optional for users.

Apple had previously raised concerns that the draft code would not safeguard end-to-end encryption, putting everyone’s messages at risk of mass surveillance. In the end, the Australian eSafety commissioner softened the law, allowing companies to propose alternative solutions for addressing child abuse and terror content without sacrificing encryption.

Apple has drawn heavy criticism from regulators and law enforcement worldwide for its reluctance to compromise end-to-end encryption in iMessage for legal purposes. However, when it comes to child safety, I believe tougher measures are sometimes necessary, and laws like these aim to address that very issue.