A Redditor has found built-in Apple Intelligence prompts inside the macOS beta, in which Apple tells the Smart Reply feature not to hallucinate.

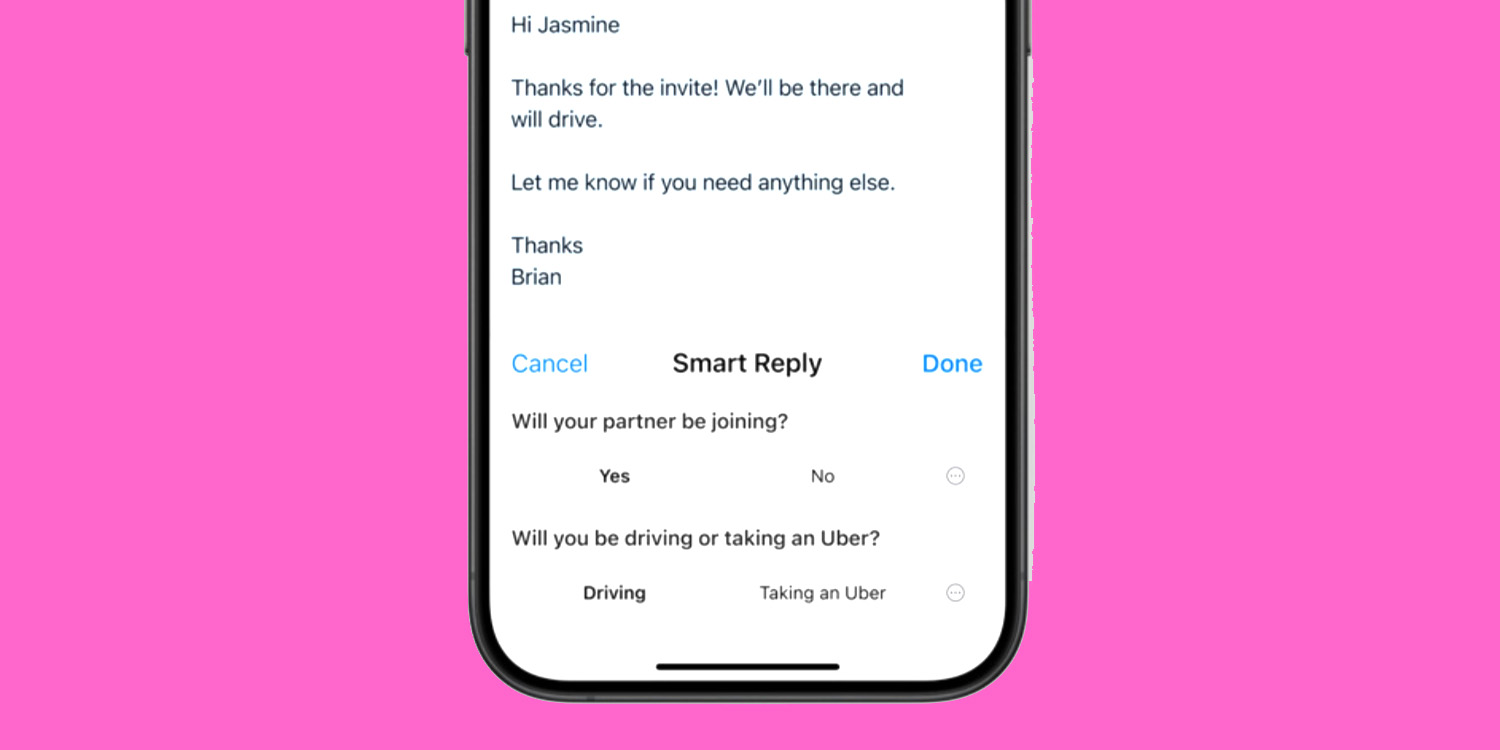

Smart Reply helps you respond to emails and messages by checking the questions asked, prompting you for the answers, and then formulating a reply …

Smart Reply

Here’s how Apple describes the feature:

Use a Smart Reply in Mail to quickly draft an email response with all the right details. Apple Intelligence can identify questions you were asked in an email and offer relevant selections to include in your response. With a few taps, you’re ready to send a reply with key questions answered.

Built-in Apple Intelligence prompts

Generative AI systems are told what to do by text known as prompts. Some of these prompts are provided by users, while others are written by the developer and built into the model. For example, most models have built-in instructions, or pre-prompts, telling them not to suggest anything that could harm people.

Redditor devanxd2000 found a set of JSON files in the macOS 15.1 beta which appear to be Apple’s pre-prompt instructions for the Smart Reply feature.

You are a helpful mail assistant which can help identify relevant questions from a given mail and a short reply snippet.

Given a mail and the reply snippet, ask relevant questions which are explicitly asked in the mail. The answer to those questions will be selected by the recipient which will help reduce hallucination in drafting the response.

Please output top questions along with set of possible answers/options for each of those questions. Do not ask questions which are answered by the reply snippet. The questions should be short, no more than 8 words. Give your output in a json format with a list of dictionaries containing question and answers as the keys. If no question is asked in the mail, then output an empty list. Only output valid json content and nothing else.
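To make the requested output format concrete, here is a minimal Python sketch of how an app might check a model response against the rules stated in the prompt: valid JSON, a list of dictionaries keyed by question and answers, questions of no more than 8 words, and an empty list when nothing was asked. This is an illustration only, not Apple's code. The function name `parse_smart_reply_output`, the exact key spellings `"question"` and `"answers"`, and the choice to treat answers as a list of strings are all assumptions.

```python
import json

MAX_QUESTION_WORDS = 8  # limit stated in the prompt


def parse_smart_reply_output(raw: str) -> list[dict]:
    """Parse model output and check it against the format the prompt describes.

    Hypothetical helper: key names and the answers type are assumptions.
    Raises ValueError when the output does not match.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc

    # The prompt asks for a list; an empty list means "no questions found".
    if not isinstance(data, list):
        raise ValueError("top-level value must be a list")

    for i, item in enumerate(data):
        if not isinstance(item, dict) or set(item) != {"question", "answers"}:
            raise ValueError(
                f"item {i} must be a dict with keys 'question' and 'answers'"
            )
        question, answers = item["question"], item["answers"]
        if not isinstance(question, str) or not question.strip():
            raise ValueError(f"item {i}: question must be a non-empty string")
        if len(question.split()) > MAX_QUESTION_WORDS:
            raise ValueError(f"item {i}: question exceeds {MAX_QUESTION_WORDS} words")
        # Assumption: answers are a list of short strings to offer the user.
        if not isinstance(answers, list) or not all(
            isinstance(a, str) for a in answers
        ):
            raise ValueError(f"item {i}: answers must be a list of strings")

    return data


if __name__ == "__main__":
    sample = '[{"question": "Which day works for you?", "answers": ["Monday", "Tuesday"]}]'
    for entry in parse_smart_reply_output(sample):
        print(entry["question"], "->", entry["answers"])
```

A check like this only confirms the shape of the response. It cannot tell whether the questions were really asked in the email, which is the part the prompt's wording is trying to address.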

‘Don’t hallucinate’

The Verge found specific instructions telling the AI not to hallucinate:

Don’t hallucinate. Don’t make up factual information.

9to5Mac’s Take

The instructions not to hallucinate seem … optimistic! The reason generative AI systems hallucinate (that is, make up fake information) is that they have no actual understanding of the content, and therefore no reliable way to know whether their output is true or false.

Apple fully understands that the outputs of its AI systems are going to come under very close scrutiny, so perhaps it’s just a case of “every little helps”? With these prompts, at least the AI system understands the importance of not making up information, even if it may not be in a position to comply.

Image: 9to5Mac composite using an image from Apple
